I never said I was consistent. The horror aesthetic is in right now, and creeping doom is on every horizon, but that’s more the state of the gaming industry than its content. Perpetual experiences and micro-transactions don’t lend themselves well to producing horror games, even if they do inspire dread. So, it was either ride the Dark Souls wave or write about the only thing more horrifying than creeping existential horror: politics.

Not here, though. No, the personal is political, so I can excuse the evening off from charting our winding path into a coiling snare. There are so many things in this world that would bind us and use us that one can hardly keep track. Sorry, got kinky there for a second, but it speaks to the question that faces most artists in today’s market.

There are many ways to read Velvet Buzzsaw, and I’ve spent a lot of time avoiding outside interpretations of the movie, except the initial review that led me to it. I wanted to remain surprised, because I was promised a character-focused horror movie with great cinematography and a twist. I got a lot more than that, though, and I want to give you the same opportunity I had to enjoy it. So, here’s your spoiler-warning. From here on out, I’m going to be spoiling major plot-points and the central gimmick of the twist, as well as dissecting what I see as the ethos of the film. There will be no coming back from these spoilers, soo…

SPOILER WARNING!!!!

That being said, let’s start with the exploiters of artists and the gate-keepers that enable the scarcity they profit off of. I mean, the cast. Morf Vandewalt is a renowned art critic that is insulated by pride, pretence and pomp. He acts as a gate-keeper of value in the artistic world that “assesses out of adoration,” but the film goes out of its way to demonstrate his profound naivety towards the powerful people and institutions that profit off his work. This is a great example of the lighting direction and framing coalescing with the narrative at the perfect moment for a story beat.

Up until this moment, Morf has been framed as a suave, sophisticated socialite whose words can move mountains. But here, for one moment, his hair is under-lit to make it look like a bowlcut, as Morf says, with absolute confidence, something that we know isn’t accurate. He is, in truth, incredibly childish. You can see it in his demands and complaints. As a critic, Morf is being used to promote dangerous items for dishonest people, as well as destroying the careers and lives of countless artists to further his cachet. He excuses his actions by claiming that his opinions are honest, and they do seem very genuine, but he does tear down Ricky for Josephina. He knows it is wrong, though, and he even gets moments of moral indignation by refusing to sell out his personal beliefs. Morf is not morally corrupt, but he’s not overtly critical of the systems off of which he profits.

You know who is morally corrupt, though? Rhodora Haze, former punk artist turned wealthy socialite. As the owner of the Haze Gallery, she deals in modern art and Machiavellian politics. She’s manipulating Morf behind the scenes, holding contracts like switch-blades under the throats of other characters and stage managing the launch of the Vetril Dease Collection: a series of paintings that were discovered after the artist’s death. Since the artist is dead and the owner of the art is under her thumb, she is free to lie about the paintings at will. She squirrels half of them away to increase their scarcity value.

Rhodora’s mirror-mirror doppelganger is Gretchen. As a newly-minted art advisor/gate-keeper, she’s trying to survive in the same world of money and perception as Rhodora, but she’s nooot as good at it. In her stumbles, we see the ugly seams of their art scene come blatantly to the surface. She tries to bribe Morf to point her to “under-valued, pre-review art,” because she sees Rhodora profiting by buying pieces before Morf’s favourable reviews go live and increase their value. Of course, Rhodora isn’t bribing him; she’s spying on him. Despite Morf’s integrity, he exists in a system where his favourable reviews can be forecast by the people who profit off of them. An uncomfortable reflection of the game review space I left all those years ago? Maybe that’s why we’re here…

Let’s not get TOO meta, TOO fast. Where were we? Josephina is another inductee into the world of power and politics. As an employee of Rhodora, she stumbles across the paintings of Vetril Dease on the evening of his death. Recognising his brilliance and claiming them for herself, Josephina empties the man’s apartment of his life’s work. It’s clear that Rhodora took her under her wing as an employee, but is in the process of demoting her when she’s tipped off to the existence of the Dease collection. Josephina is strong-armed by Rhodora, under threat of contractual litigation, into handing over management rights for the Dease Collection in exchange for wealth and prestige. She agrees, because she has no choice.

Jon Dondon is a competing art dealer who learned well from Rhodora. He spends his days trying to poach artists from the Haze Gallery and sabotaging their stars. Jon Dondon puts considerable effort into undermining the reputation of the Dease Collection before meeting his bitter end. His body is discovered by Coco.

Coco is the mirror-mirror opposite of Josephina. She’s working an entry-level position at the Haze Gallery and learning how to navigate the choppy waters of a cut-throat industry. I have a fan-theory about her. I think she sabotaged Josephina in the opening act of the movie by calling her right before work to tell her about her cheating ex. When Josephina was moved off the front line, Coco got her job. A job she immediately lost, because Rhodora uses and discards people at will, but it’s clear that she’s learned how to undermine people in order to advance her interests. Yet, the film doesn’t totally condemn her for her actions. Coco’s trying to survive in a harsh market, and it’s causing her to compromise her integrity. At the mid-point of the film, she reveals that she’s been spying on Rhodora, and the information she gleaned gets her a new job. So, she’s dishonest, buut…

Which is really more of a condemnation of the system she wants to profit off of than her as a character. At least, in relation to the other characters in this little psychodrama. She keeps losing her job, because her employers keep dying for their sins, but she survives, even if her dream is broken.

That’s most of the main cast. There’s the blue-collar Bryson, who exists as a stereotype both so broadly drawn and utterly eclectic that I can only call him a douche-hipster. Piers is an established artist that is struggling to find creative truth in a system that values constant exploitable productivity. Damrish is an emerging artist from the streets that wants to find a place where his work can mean something, caught between financial success and cultural relevance.

The last two members of the important players are almost uncredited. They are the representatives of the Museum of Modern Art, and only one of them gets a name. It’s Jim, and his unnamed partner says one of the most important lines in the film to Gretchen: “…If you wanted to ram more of your collector’s hoard down our throat to increase their value, you should have done so before the Dease deal was locked…” You see, there’s only so much room in a gallery to show off cultural artefacts, so curators have to be careful with their space. As an artistic institution, the museum has a responsibility to the integrity of the art world, but the leverage provided to Gretchen by the inflated value of the Dease Collection gives her power over their investors. The wealthy gate-keepers of the art world have lent her the pictures and capital necessary to dictate what cultural artefacts will be displayed as valuable in the museum. She does to them what Rhodora did to her by packaging Dease with another artist named Minkins. In this scene, the film is saying that the power structures that profit off of exploiting artists do not elevate art based on its cultural value but on its ability to be exploited for profit. Therefore, the exemplars of this cultural space did not gain ascendancy through cultural relevance, but through systems of power. Even if the art is only valuable for the moment:

From EA to Disney, this is the relationship that most artists find themselves in today. The gate-keepers of artistic value are propped up by towers of money, and it seems like the only way forward is to sell-out for profit. As Gretchen says, “Look, I came to the museum, because I wanted to change the world through art. But, the wealthy vacuum up everything, except crumbs. The best work is only enjoyed by a tiny few. And they buy what they’re told. So why not join the party?” These are not evil people, but they’re still profiting off of a system that locks culture and the ascendancy of artistic vision behind pay-walls and monetary access. So, what should an artist do in this environment? What is the point of art if nobody sees it?

It turns out that the film has really optimistic answers to both those questions, but I want to address two very important aspects before we get to those answers. The first is that the paintings are haunted by the ghost of Vetril Dease, because he literally put his blood, sweat and tears into his work. His pain and madness are seared into his tormented works, and their raw manifestation is what ensorcells both critics and artists alike. This life of misery, “A howl for answers and a resolution that never comes,” is the fascination point off which the galleries will profit. They’re capitalising on the dead man’s pain.

The second aspect is the way in which Dease’s media is altered for consumption. Not a single stroke on his paintings is changed, but when they are removed from the context of his tiny, ramshackle house, they lose their connection to their meaning. Dease faced serious abuse, and his work was a means of processing that trauma. His howl for answers reveals the depth of that pain, but that depth is completely removed by transplanting it to a gallery space for safe consumption. In a gallery, people see the brilliance and clarity of the emotion, but they are insulated from its impact. Capitalism sanitizes media and removes it from the consequences of its context, a point which the film makes extremely explicit:

Everyone thinks that Gretchen’s death is part of the exhibit, so they’re removed from the reality of the situation. The children in this scene think it’s fake blood; they assume that everything in the gallery is safe for consumption, so they are protected from its implications. The paintings and sculptures in this movie could stand in for any form of media. By removing media from its context and making it safe for capitalistic consumption, we are insulated from its true meaning, thereby allowing the framers of that information the power to control our perception of it. Which gets even more interesting when you consider that Damrish is a culturally-relevant Black artist being tugged away from his artist’s collective to pursue success in a mainstream gallery.

It should not be ignored that the history of African Americans in most artistic mediums includes a long history of exploitation. Nor should it be ignored that the gate-keepers of power in this movie are primarily white, and that systemic socioeconomic differences are of key importance in relation to their access to power. No one in this movie is anything close to racist, but they are unquestioningly profiting off systems of exploitation built on inequality. You could not have an honest psychodrama about the flaws inherent in media exploitation without bringing up the rotten foundation on which it is built. This applies to all vulnerable populations across the media landscape, from Freddie Mercury to the Jackson 5. While naming those two makes it seem like these were relationships of mutual profit, we’d do well to remember the barriers they faced and the artists of all stripes and orientations who see nothing for their lifetimes of work. The more vulnerable you are, the easier you are to exploit, which is why some populations are targeted more explicitly than others.

Everyone in this movie is duplicitous to some degree. They’re all stage-managing personas and stabbing each other in the back behind warm smiles and “Kisses!” They are the perpetual manifestations of this psychodrama, profiting with cold brutality as they devour warm bodies with neat, sanitized pretence. It’s the same cheerful nihilism on display when the News feeds us justifications for murdering people and presents the deaths of thousands as an engaging tragedy. There is no human way to easily digest the deaths of that many people, but when it scrolls by on your news feed, it feels like one more point in a burning world. Something you can accept and “deal with.”

The personal is political, and the most important point of conflict in the daily lives of most people watching this movie will be fighting the apathy bred by comfort. The most important weapon against action is the idea that everything will be okay. By making things safe and digestible, we never feel the true weight of the tragedies happening around us. There is an art to insulating us into inaction, while at the same time justifying murder. It is the howling storm into which we all must scream.

That brings me back to the haunted paintings and my two final questions. What is the point of art if nobody sees it? I think the film answers that rather handily on multiple occasions. An artist’s primary concern should be the act of creation. “All art is dangerous,” which is to say that all art is powerful. Dease created otherworldly paintings as a byproduct of using art as a tool for exploring his trauma and grief. He survived a life of torment through art, even while definitely committing some murders along the way.

At the end of the film, we have an extremely human moment with Rhodora as she hands the keys of her beach house to Piers. Piers has been struggling with alcoholism and the media-driven idea that artists flourish by sacrificing their sanity to addiction and mental illness. Grappling with the raw honesty on display in Dease’s work, he wants to keep the “easy answer” at arm’s length, while he “tries to get back to creation.” The art world only values him as long as he’s creating work that they can sell, and it seems like the only answer to creating that work is sacrificing his mental well-being. Which is when the ghost of another dead artist steps in. Rhodora’s old girlfriend Poly, who was killed by a similar life, whispers a quote into the chill winds of history that saves Piers: “Dependency murders creativity. Creativity plays with the unknown. No strategies exist that can enclose the endless realm of the new. Only trust in yourself can carry you past your fears and the already known.”

Dependency on liquor and the financial approval of others is murdering Piers’ creativity, while the addiction slowly kills him. Rhodora tells him to take a break from the art scene, because she’s aware of its potential for toxicity. He needs to heal. She tells him to go somewhere and do something for nobody but himself. Because, at the end of the day, you always see your art. What is the point of art if nobody sees it? None, but the artist always sees their work. Art is a tool for expression and self-discovery. The finished product has inherent value to the artist and naturally reflects the time-period of its creation. At the beginning of the film, Rhodora posits that her art world has been thriving since a caveman charged a “bone to see the first cave painting,” tacitly suggesting that art and capitalism are inherently intertwined. Which perfectly reflects her world-view, but it has nothing to do with reality.

While it’s true that pretty shells and intricate carvings have always been traded as valuable, the drive to create is inherent to the human experience. Whether it’s ancient, crumbling paintings or lines in the sand swept away by the tide, the act of creation is inherently valuable. When we place importance on the exploitability of the finished product, rather than the act of creation, we murder creativity. So, what should an artist do in this environment?

Damrish’s story-line follows this logic to its fruition. The personal is political, so we should create for each other in an accessible space. Instead of allowing ourselves to be exploited for personal economic gain, we should look to the good we can do in our community as artists. If we have a voice, then we should use it with thoughtful purpose. At the same time, the collective in the film suggests that if this is the case, then the community needs to be there to support these artists. As someone who wrote free articles for years, I can tell you that you can’t dine on artistic integrity. We need to respect each other and our art, because our creations hold value. Both for ourselves and the future generations that will try to understand us through the exemplars of our culture.

Then, there is the other artist: Vetril Dease. The vengeful spirit left to toil in obscurity, exploited after death without the consent of his personal demons or consideration for the private pain it would unearth. His flesh was sold at auction with each painting, his literal body of work was violated for profit. Artists often sign away the right to control their work in exchange for the ability to create it, especially in the gaming space. Their legacy as creators is controlled as if they were a meaningless incident of its creation. The sole reason I’m writing this review, now, is that I kept asking myself one question: “What is the motivation of the paintings in this movie?” What injustice do the demons locked away behind Dease’s rage seek to correct? In looking for this answer, I came to my thesis for the film: “By making media fit for consumption by cultural institutions propped up by capitalist markets, we sanitize pain and profit off of death. Art that is both personal and culturally relevant/transgressive will/should undermine the power structures that enable the exploitation of art and humans, so that no more people must die in painful obscurity while the rich profit off their work.”

Each death in the movie reflects this motivation. Bryson was supposed to take the Dease paintings to be locked away in a warehouse, but he clearly died on a country road in the middle of stealing them. Rhodora kept Dease under lock and key, both preserving the paintings he wanted destroyed and setting his work up to be exploited. Josephina stole his work and sold it, despite his wish that it be destroyed. Jon Dondon dug up Dease’s tragic past, but he was killed before he could use it to undermine the value of the work and profit off the destruction of his competitors through it. Gretchen was killed moments after threatening to use Dease’s work to destroy the hopes of emerging artists, keeping undiscovered artists like Dease in the dark forever to profit off the increased value of Dease’s posthumous work. Morf kept emerging artists from the public’s eyes by judging their work to be valueless, profiting off their destruction to the point that one of his targets killed himself. Further, Morf stood to make millions from an exclusive Dease book-deal. Before dying, he publishes a tell-all article on Dease’s tragedies and the deaths connected to his work. It is telling that Morf was assumed to be corrupt by people like Gretchen, and that his credibility was only ever destroyed when he told them a bizarre, uncomfortable truth.

Damrish and Piers don’t die, because despite their exposure to the work, they never exploited it. Coco kept losing her job, so she never made a dime off it, either. She lost her dream but not her life. She’ll return home and find something new. And Dease’s art? It’s still out there, circulating out of stolen crates. Which makes me wonder… out of the context of these exploitative systems, will his art still be dangerous? Or was his art most impactful/destructive within this system, because it sought to tear down the power structures that exploited it? Am I reading too much into this? Possibly.

These paintings reflect a dark theme that stretches all the way back to “The King in Yellow.” A terrifying work that inspires heights of artistic excess or the lows of human suffering. Artists often see in works of this amorphous, eldritch nature a kind of creeping wonder. Am I divining something that exists or creating an idea from whole cloth? Kind of meta in a sense, because it comes back to why I’m here and often not here.

I created this blog to “forward the realm I analyze,” as Morf would say, but I found myself in the same position a lot of the time. Discussing gaming at this moment is putting support behind companies that are busily exploiting and destroying the works and artists that I admire. I couldn’t allow myself to be part of that anymore, even if the encroachment of larger investors opened the doors for greater profit. I have zero desire to be chewed up and spit out by an ungrateful industry, but I will always love gaming.

Yet, in my absence, I’ve seen the work of people like Jim Sterling and others pushing back against the glorification of exploitative excess, and I see in that movement a future for gaming. We’ve been here before, so maybe it’s better to stand and fight. These exact same historical beats have been playing out over time, from 3-D to the syphilitic collapse of the AAA Industry from its moulding throne. As we know, from the ashes of failure rise the Embers of a new age, and I’ve been heartened by the growth of the indie scene on Steam. Its gluttonous collapse after Steam Greenlight seemed to me the absolute excesses of Dark Souls’ Humanity. Even I’m back here, writing about the revolutionary potential of an above-average horror movie churned out by a corporate mechanism designed to respond to Disney’s global I.P. Power Grab.

Still, we must shout into the howling gale. We must not place the value of creation on the exploitability of the finished product. In this life, we must live and create, finding joy and integrity where we can make it. I have no mouth and I MUST scream.

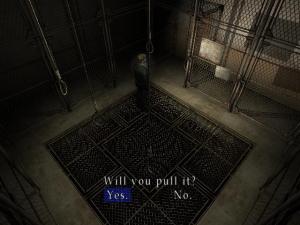

Also, I wanted the opportunity to clear up a misinterpretation of my earlier assertion that reaction-time was an important consideration in relation to tank-controls on early survival horror games. Yeah, the tension-and-release cycles are still effective, even when the controls are fluid, because they speed up the monsters, too. The point isn’t the movement of the characters on the screen. The point is the experience of the individual reacting to them. So, if both characters are slow, then tension rises as the character prepares to react, then executes and experiences the results of those actions. The timing of that cycle is the important thing, not the speed of the pixels on the screen. When you’re running away from Pyramid Head’s attack in Silent Hill 2, you have a fraction of a second to react, then pick a direction and run. The tension exists in the execution of the maneuver, even if success or failure is decided at the beginning of the reaction when the startle reflex kicks in. It doesn’t matter if you get hit; it matters that you panicked for a second while you tried to react. That’s part of the build-up of physical tension that accompanies the game’s psychological anxiety.

The Hunters in Resident Evil are the same way. They cross vast distances in one jump, but your reaction to them is hurriedly finding a horizontal axis to safely cross their straight leap. This allows you the time to turn around and launch grenades at them. The controls were slow, so the tension came from thinking quickly. Now, you can react more quickly, but the startle reaction is still there, so more of the mechanical tension has to come from the staging of the game-play sequence.

So, yeah, Velvet Buzzsaw is a psychological revenge-drama where art goes on a killing spree to avenge an exploited artist. Give it a look if you’re still interested. Even with everything spoiled, it’s worth watching. Hell, maybe I’ve been over-analyzing things this whole time, and you’ll find something different in viewing it. That’s the nice thing about art: we each get to decide its value to us. I’ll be back if I feel like I have something else worth saying. Until then, I’ll just be enjoying things for their own value, because…